AI-assisted document archiving

We have always offered Master's thesis opportunities to university students to support their academic and professional qualifications. More than 40 Master's theses have been completed for Vertex over the years. The research results are put to use in our product development to provide cutting-edge software solutions for our customers. In the “My Master's Thesis Journey” blog series, our young professionals talk about their Master's theses and what they learned and accomplished along the way.

Background

I had already been working for Vertex Systems Oy for three years, as a summer trainee in the summers and as a part-time employee the rest of the time, before it was time for me to write my Master’s thesis in January 2021. Since I really liked working at Vertex and had already written my bachelor’s thesis in co-operation with Vertex a couple of years earlier, it was quite clear that I would also write my Master’s thesis while employed by Vertex Systems Oy.

Deciding on the thesis topic

Together with my supervisor and our CTO, I started investigating what would be a suitable topic that fit both my studies and the needs of Vertex Systems. After some time, we all agreed that the thesis would concentrate on some kind of document archiving based on machine learning, or ML for short. The idea came up because we all knew the struggle of manually keeping track of large masses of documents, and since Vertex Systems had just planned to improve its document archiving capabilities, it was the perfect time to give ML a chance.

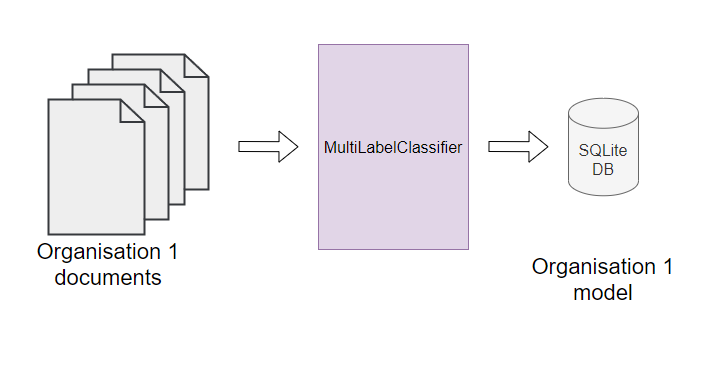

The original idea was to use each customer’s own pre-labelled document mass to train a classifier and later use that model to predict labels for documents that have none. This way each customer organization would have its own trained model that gives customized predictions based on that organization’s own documents. The official title of the thesis became “Multi-label classification for industry-specific text document sets”.

Even though I had studied machine learning and was quite familiar with its concepts, classifying text documents required natural language processing, or NLP for short, which was not my strength. Still, I thought it was necessary to leave my comfort zone and learn something new. Later I found out it was totally worth it.

Basic theory

My thesis process started, of course, with an information-gathering phase, in which I read a lot about NLP, looked for previous research related to my topic and evaluated the relevance of the publications I found interesting. There was quite a lot of information available, which was a positive problem, of course.

I found a couple of very interesting publications that solved problems very similar to the one I was dealing with: a large number of text documents needed to be sorted based on their content. The only drawback of the methods used in these publications was that they assigned only one label to each document, while I wanted multi-labelling capabilities.

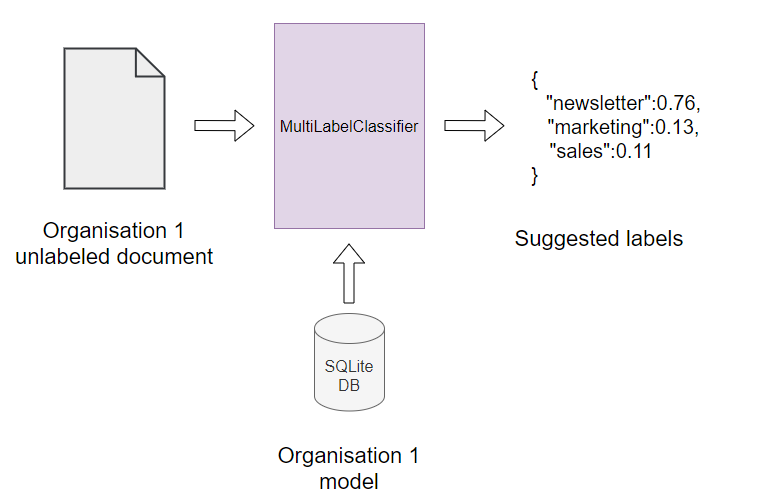

By multi-labelling each document, we didn’t have to determine one exact class for it but could instead describe what kind of characteristics it has. The concept is similar to, for example, movie genre descriptions: a movie can have features of horror and comedy simultaneously. This way, searching for a document by partially overlapping labels is easier than trying to find one with strictly one label.
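To make the idea of overlapping labels concrete, here is a minimal Python sketch. It is only an illustration: the thesis implementation was written in C#, and the file names, labels and helper functions below are made up for this example.

```python
# Hypothetical documents with hypothetical label sets (not from the thesis).
documents = {
    "offer_2021.pdf": {"sales", "contract"},
    "manual_v3.pdf": {"technical", "installation"},
    "service_agreement.pdf": {"contract", "technical"},
}


def find_with_any(labels_by_doc, wanted):
    """Names of documents that share at least one label with `wanted`."""
    return sorted(
        name for name, labels in labels_by_doc.items() if labels & wanted
    )


def find_with_all(labels_by_doc, wanted):
    """Names of documents that carry every label in `wanted`."""
    return sorted(
        name for name, labels in labels_by_doc.items() if wanted <= labels
    )
```

With a single class per document, "service_agreement.pdf" would have to be filed as either a contract or a technical document. With label sets, a search for "contract" and a search for "technical" both find it.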

Implementation

Normally, the standard programming language for developing ML applications is Python. This time, however, the implementation was intended to act as a cloud-hosted microservice that takes files as input in HTTP requests and returns suitable labels for those files in the response. In addition, the application had to be containerizable so that it could be deployed in a Kubernetes cluster as a Docker container.

Figure 1. During the training phase, the classifier takes documents as input and outputs a trained model.

Because of these requirements, the implementation was done in C#. It sits nicely in the middle ground between high-level and low-level languages, is well suited to crafting the data structures and algorithms needed on the ML side, and also provides the functionality for building platform-independent RESTful services with ASP.NET Core Web API.

Figure 2. In the classification phase, the classifier uses the previously trained model to provide label suggestions for unlabeled documents.

There was also one other thing that is unusual for ML applications: normally the model used to classify new instances is kept in memory, but in this implementation the model was stored in a lightweight SQLite database. The model was therefore not loaded into memory when needed but queried from the database, which radically reduced the amount of memory required. This was quite a nice feature, since the application would run on a server with limited memory and take requests from possibly thousands of clients in tens or hundreds of organizations, each with its own model trained on the organization’s own documents.
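The following Python sketch shows one way a model can be queried from SQLite instead of being held in memory. It is not the thesis implementation, which was written in C#. The table layout, the term-weight model, the whitespace tokenizer, the threshold and all function names are assumptions made purely for illustration.

```python
import sqlite3


def create_store(path=":memory:"):
    """Open a SQLite database holding one weight row per (org, term, label).

    The schema is a hypothetical example, not the one used in the thesis.
    """
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS weights ("
        "org TEXT, term TEXT, label TEXT, weight REAL, "
        "PRIMARY KEY (org, term, label))"
    )
    return conn


def save_weights(conn, org, rows):
    """Store (term, label, weight) rows as the 'trained model' of one organization."""
    conn.executemany(
        "INSERT OR REPLACE INTO weights VALUES (?, ?, ?, ?)",
        [(org, term, label, weight) for term, label, weight in rows],
    )
    conn.commit()


def suggest_labels(conn, org, text, threshold=1.0):
    """Suggest every label whose summed weight reaches the threshold.

    Only the rows for the terms that occur in `text` are read, so the
    organization's full model never has to be loaded into memory.
    """
    terms = sorted(set(text.lower().split()))  # naive tokenizer, an assumption
    if not terms:
        return []
    placeholders = ",".join("?" * len(terms))
    query = (
        "SELECT label, SUM(weight) FROM weights "
        f"WHERE org = ? AND term IN ({placeholders}) GROUP BY label"
    )
    scores = conn.execute(query, [org, *terms]).fetchall()
    return sorted(label for label, score in scores if score >= threshold)
```

Because every row is keyed by organization, many organizations can share one database file while still getting predictions only from their own model. A real service would also have to handle very long documents, since SQLite limits the number of parameters in a single query.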

After the thesis

The multi-labelling classifier implemented during my thesis work is yet to be integrated into a production environment, mainly because the main product itself is still under construction. I am looking forward to seeing how my thesis work will help customers once it becomes available.
